How to interpret SEON's risk scores

Updated on 24.09.25


Overview

SEON’s scoring engine empowers fraud and AML Compliance teams to interpret digital footprint, device intelligence, behavioral biometrics and AML data across multiple dimensions. The scores we provide are designed to surface risk and support decision-making in real time with flexibility across use cases.

Different risk scores surface different types of fraud signals; some look at overall transaction behavior, others zero in on the phone, email, IP or device information. Some scores are based on your rules, while others use sophisticated AI and machine learning models to catch hidden patterns a human might miss.

The Fraud score vs the AI Insights score

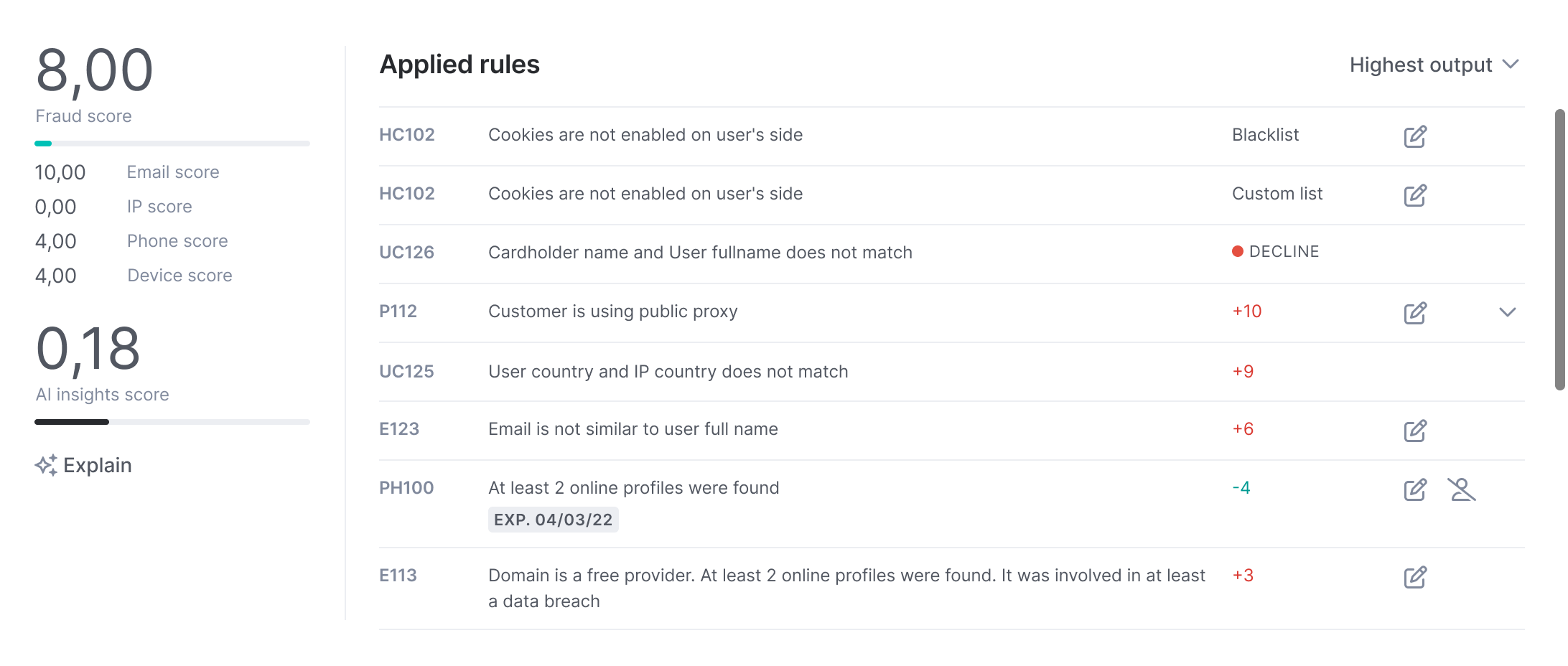

The fraud score is based on the default rules, custom rules you create and the AI rule suggestions you deploy. In the Applied rules widget, you can review the transaction’s fraud score and the rules it triggered.

AI and machine learning algorithms power the AI insights score and the email and phone global network scores. These models learn patterns from millions of events and SEON’s global fraud consortium.

Why do we use both?

When you're just getting started, rules provide immediate control and transparency, helping you act fast while the system begins learning from your data. As SEON’s AI Insights score observes more labeled transactions, it becomes increasingly tailored to your unique customer behavior, fraud patterns and operational context. Over time, the combination of rules and machine learning delivers deeper precision, better context and smarter fraud decisions.

Here’s what each score tells you

| Score type | What it does | Can you use the score in the rules engine? |
| --- | --- | --- |
| Fraud score | Calculated by summing the values assigned by all the triggered rules. Only rules that include an action to increase or decrease the fraud score contribute to the final total. This score is the primary metric for deciding whether to approve, review or block an event. | Yes |
| AI Insights score | Derived from SEON’s global AI and machine learning model for the first ~1,000 events, then adapted using your organization’s labeled data and decisions. It analyzes all of your fraud data signals, including SEON’s advanced digital footprint, device intelligence and consortium network data. By default, this score does not affect the fraud score or transaction state unless a rule is configured to do so. Once it is sufficiently trained, you can use it as a second layer of defense by incorporating it into the fraud score and Scoring Engine. | Yes |
| Email & phone network scores | Predict fraud likelihood based on signals and metadata tied to the transaction's phone number or email address, alongside SEON consortium data. Include Fraudulent Network History, which shows past fraud appearances across the SEON network. | Yes |
| IP score | Rule-based score that evaluates IP-based risk (VPN, Tor, datacenter, etc.) across the SEON network. | Score only (not Fraudulent Network History) |
| Device & OS score | Rule-based score that flags anomalies in device fingerprint, OS and browser setups. | No |
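The fraud score row above describes a simple sum over triggered rules. The sketch below illustrates that arithmetic only; it is not SEON's implementation. The `TriggeredRule` type, the rule names and the point values are hypothetical, and any platform-side handling not described in this article is left out.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TriggeredRule:
    """Hypothetical stand-in for a rule that fired on a transaction."""
    name: str
    # Points the rule adds (positive) or subtracts (negative).
    # None means the rule has no score action (e.g. it only tags the event).
    score_delta: Optional[int]


def fraud_score(triggered: list[TriggeredRule]) -> int:
    """Sum the values of triggered rules that carry a score action."""
    return sum(r.score_delta for r in triggered if r.score_delta is not None)


# Illustrative values only.
rules = [
    TriggeredRule("Email domain is disposable", 10),
    TriggeredRule("IP is a known proxy", 8),
    TriggeredRule("Customer is on allowlist", -5),
    TriggeredRule("Tag event for manual review", None),  # no score action
]

total = fraud_score(rules)
print(total)  # 10 + 8 - 5 = 13
```

The rule without a score action still triggers, but it leaves the total unchanged, which matches the "only rules that include an action to increase or decrease the fraud score" wording.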

How should analysts interpret these scores?

Start with the fraud score

Think of this as your first layer of defense. It’s composed of rules and explainable models that you or your team can actually tune. If it’s high, that usually means something’s off and fast action might be needed. It’s the most automation-friendly score and the easiest to justify in an audit.

Then check the AI insights score

This score identifies subtle and complex patterns that standard fraud models may miss, making it especially valuable in edge cases. For instance, if the fraud score is relatively moderate, suggesting some risk but not enough to warrant a block, and the AI Insights score is notably higher, that gap should prompt a deeper investigation. In these moments, the AI Insights score serves as a secondary layer of analysis, offering context based on behavioral patterns and digital footprint signals.

Its output depends heavily on how your team configures the system and how consistently you train the model using labels. The AI Insights score includes clear signal explanations, showing what contributed to a higher score, such as anomalies or risk indicators, and what helped reduce it, like signs of legitimate user behavior. This allows analysts to make more informed and confident decisions.
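The gap check described above (a moderate fraud score paired with a notably higher AI Insights score) can be sketched as a small helper for an analyst queue. This is a minimal sketch under stated assumptions: the function name, the "moderate" band and the gap size are all hypothetical, and it assumes both scores are compared on the same numeric scale. Tune the values to your own thresholds.

```python
def flag_score_gap(
    fraud_score: float,
    ai_insights_score: float,
    moderate_band: tuple[float, float] = (20.0, 50.0),  # hypothetical band
    min_gap: float = 25.0,                               # hypothetical gap
) -> bool:
    """Return True when a moderate fraud score is paired with a much
    higher AI Insights score, i.e. the case worth a deeper look."""
    low, high = moderate_band
    is_moderate = low <= fraud_score < high
    gap = ai_insights_score - fraud_score
    return is_moderate and gap >= min_gap


print(flag_score_gap(30, 80))  # moderate score, large gap
print(flag_score_gap(30, 40))  # moderate score, small gap
print(flag_score_gap(60, 95))  # already above the moderate band
```

The helper only surfaces events for investigation. It does not change the fraud score or the transaction state, which matches the default behavior of the AI Insights score described in the table.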

Email & Phone network scores: A gut check

These are your “does this email or phone number feel legit?” scores. They tap into a rich pool of digital footprint data plus our global fraud history, even if you haven’t seen this customer before. If they come in high, that’s your signal: this contact info is behaving like known fraud.

Use IP and device scores to paint the full picture

These scores shouldn’t be read on their own, because each one reveals only a fraction of a person's digital identity, but they power a lot of what happens behind the scenes. See someone using an emulator, a spoofed browser or an IP from a known proxy network? That helps you decide whether something is weird but legitimate or weird enough to block.

Bottom line?

Don’t think of scores as one-and-done answers. Think of them as signals in a conversation: each one adds a little more color to the story. Start with the high-signal stuff like the fraud score and the AI Insights score. Then layer in the rest to either confirm your hunch or spot something you might’ve missed.

Best practices for score interpretation

Use in combination: No single score should dictate outcomes in isolation. Corroborate risk signals across vectors (e.g., high IP score + fraudulent history).

Adjust for customer segment, product and geography: The right score thresholds may differ across these dimensions, so review them per segment rather than relying on a single global cutoff.

Label feedback loop: Continuously label and feed outcomes into SEON to improve the AI Insights score.

Monitor score drift: Watch for systemic changes that alter baseline scores (e.g., new proxy services or mobile OS updates).
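One simple way to watch for score drift is to compare the average score in a recent window against a baseline window. The sketch below is an assumption-laden illustration, not a SEON feature: the function names, the sample scores and the tolerance are hypothetical, and a plain mean comparison is only one of many possible drift checks.

```python
from statistics import mean


def mean_shift(baseline: list[float], recent: list[float]) -> float:
    """Difference between the recent and baseline average scores."""
    return mean(recent) - mean(baseline)


def has_drifted(
    baseline: list[float],
    recent: list[float],
    tolerance: float = 5.0,  # hypothetical tolerance, in score points
) -> bool:
    """True when the average score moved by more than the tolerance."""
    return abs(mean_shift(baseline, recent)) > tolerance


# Illustrative score samples, e.g. IP scores from two different weeks.
baseline_scores = [10, 12, 8, 11, 9]    # mean 10
recent_scores = [18, 20, 17, 22, 18]    # mean 19

print(mean_shift(baseline_scores, recent_scores))   # 9
print(has_drifted(baseline_scores, recent_scores))  # True
```

A flagged shift is a prompt to look for a systemic cause, such as a new proxy service or a mobile OS update, before adjusting thresholds.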