Labels vs tags

Updated on 15.12.25

Unlocking continuous accuracy: why labels are required for accurate risk decisioning

Even with the richest real-time signals, risk engines can only go so far in accurate detection. Without a robust feedback mechanism, even the best-in-class models will plateau, never learning the nuances of your business, your customers or your evolving fraud tactics. That’s where labels come in.

While tags help categorize transactions and users — and add valuable business and analytics context — they don’t inform models directly. In contrast, labels feed verified “ground truth” outcomes back into SEON’s AI engine, enabling continuous learning and refining the system’s ability to distinguish between legitimate and fraudulent activity.

Drive precision with real-world outcomes

Models trained on live, business-specific outcomes stay razor-sharp. Labels capture both the decision on an alert or transaction (approve or decline) and the reason behind it, giving the AI and machine learning engine the detailed context it needs to uncover complex patterns and emerging fraud tactics.

By harnessing a wide spectrum of real-time signals and precise, label-driven feedback, models continually improve fraud detection accuracy, minimize false positives and directly protect revenue while enhancing customer experience.

What are labels?

Labels are verified outcomes that note the final “good” or “bad” verdict on each alert and transaction based on downstream confirmations. Each alert and transaction can carry a single label that precisely captures the root reason.

Below are examples of generic labels that initial label automation can apply in place of transaction states.

- Positive label: Marked as approved

- Negative label: Fraud

If the root cause can be identified more precisely, more specific labels can be applied.

- Positive labels: Verified payment, On-time payment

- Negative labels: Bonus abuse, Chargeback fraud, Multi-accounting, Second payment default
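As a minimal sketch, the example labels above can be modeled as a lookup from label to polarity. The label names come from the lists above; the snake_case identifiers, the `Polarity` enum and the `describe` helper are illustrative assumptions, not SEON's actual API values.

```python
from enum import Enum


class Polarity(Enum):
    POSITIVE = "positive"
    NEGATIVE = "negative"


# Label names follow the examples in this article; the snake_case keys are
# hypothetical identifiers chosen for illustration only.
LABEL_POLARITY = {
    "marked_as_approved": Polarity.POSITIVE,  # generic positive label
    "verified_payment": Polarity.POSITIVE,
    "on_time_payment": Polarity.POSITIVE,
    "fraud": Polarity.NEGATIVE,  # generic negative label
    "bonus_abuse": Polarity.NEGATIVE,
    "chargeback_fraud": Polarity.NEGATIVE,
    "multi_accounting": Polarity.NEGATIVE,
    "second_payment_default": Polarity.NEGATIVE,
}

GENERIC_LABELS = {"marked_as_approved", "fraud"}


def describe(label: str) -> str:
    """Return a short description such as 'specific negative label'."""
    polarity = LABEL_POLARITY[label]  # raises KeyError for unknown labels
    kind = "generic" if label in GENERIC_LABELS else "specific"
    return f"{kind} {polarity.value} label"
```

Keeping the polarity next to each label makes it easy to check that every specific label still resolves to a clear "good" or "bad" verdict.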

How labels work

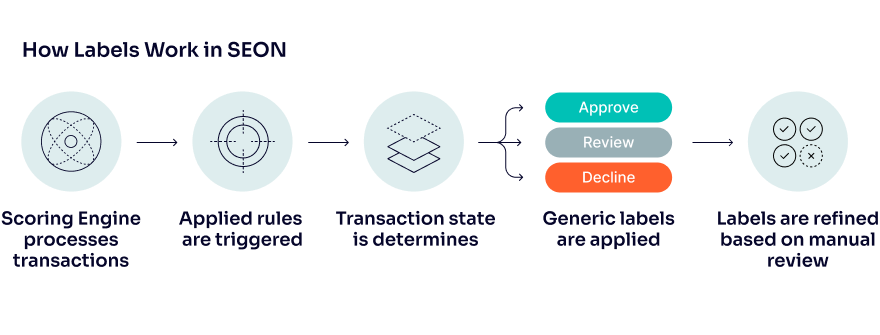

When a transaction is processed through the scoring engine in SEON, it automatically triggers all applicable rules. These rules shape the final risk score assigned to the transaction and determine the transaction’s initial state: Approve, Review or Decline.
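The flow described above can be sketched roughly as follows. This is not SEON's scoring engine: the additive scoring, the rule names, the score values and the thresholds are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Rule:
    name: str
    applies: Callable[[Dict], bool]  # predicate evaluated on the transaction
    score_delta: float  # hypothetical contribution to the risk score


# Hypothetical example rules; real rules are configured in SEON.
EXAMPLE_RULES = [
    Rule("new_device", lambda tx: tx.get("new_device", False), 8.0),
    Rule("high_amount", lambda tx: tx.get("amount", 0) > 1000, 5.0),
]


def score_transaction(tx: Dict, rules: List[Rule]) -> Tuple[float, List[str]]:
    """Trigger every applicable rule and sum the score contributions."""
    triggered = [rule for rule in rules if rule.applies(tx)]
    score = sum(rule.score_delta for rule in triggered)
    return score, [rule.name for rule in triggered]


def initial_state(score: float, review_at: float = 10.0,
                  decline_at: float = 20.0) -> str:
    """Map a risk score to a preliminary state (assumed thresholds)."""
    if score >= decline_at:
        return "DECLINE"
    if score >= review_at:
        return "REVIEW"
    return "APPROVE"
```

For example, a transaction of 1500 from a new device triggers both example rules and lands in the review band under these assumed thresholds.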

But this is only a preliminary assessment. After completing your manual review, you can apply labels to record your final decision, which will be used to train our algorithms.

How to set up labels in SEON

1. Connect to the Label API

Implement SEON’s Label API v2 to push verified labels in bulk or in real time. Labels overwrite initial transaction states (e.g. approve, review or decline), directly influencing both the AI Insights score and rule suggestions.

2. Select the applicable labels you plan to use

To adjust labels manually while reviewing alerts or transactions, first select all the labels that apply to your use cases. Navigate to AI & Machine Learning Settings, select Label API v2 and turn on access to the labels you wish to use.

3. Pick the most applicable label for each transaction

After you have reviewed a transaction or alert, adjust the transaction state to apply a label that is more specific than the generic positive and negative labels applied automatically. This gives the model more detail about the specific outcome of your transaction, so it can continuously learn and improve automatic labeling.

4. Downstream impacts of labels

Labels feed into AI and machine learning models as explicit “positive” or “negative” signals based on the use case. Your applied rules, AI Insights score and rule suggestions all adapt based on this feedback loop.

How labels affect the AI Insights score

The AI Insights score uses labels to refine its probability scoring (0–100) and can fully automate decisions above or below set thresholds. The model also translates patterns into human-readable, AI-based rule recommendations, complete with accuracy metrics, that you can deploy directly in SEON.

Best practices for labeling

1. Automate labeling to enable continuous feedback loops

Automate label ingestion via the Label API v2 immediately after your systems confirm an outcome. Faster label flow accelerates model retraining on new fraud patterns.
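One way to automate this is to queue each confirmed outcome and forward it in batches. The sketch below deliberately leaves out the Label API call itself: `send` is a placeholder callable you would implement against SEON's Label API v2 reference, and the `transaction_id` and `label` field names are assumptions, not the documented payload format.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class LabelBatcher:
    # `send` is a hypothetical hook that delivers one batch of labels,
    # for example a function wrapping your Label API v2 client.
    send: Callable[[List[Dict[str, str]]], None]
    batch_size: int = 100
    _pending: List[Dict[str, str]] = field(default_factory=list, init=False)

    def record(self, transaction_id: str, label: str) -> None:
        """Queue a confirmed outcome; flush once the batch is full."""
        self._pending.append({"transaction_id": transaction_id, "label": label})
        if len(self._pending) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        """Send all queued labels; re-queue them if sending fails."""
        if not self._pending:
            return
        batch = self._pending
        self._pending = []
        try:
            self.send(batch)
        except Exception:
            # Keep the labels so a later flush can retry them.
            self._pending = batch + self._pending
            raise
```

Setting `batch_size=1` approximates real-time delivery, while a larger value suits bulk uploads; calling `flush()` on a timer keeps small batches from waiting too long.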

2. Use precise labels

Use the most specific label available (e.g., bonus abuse, account takeover) rather than a generic “fraud” label. Granularity yields fine-tuned, high-precision rules and predictions.

3. Provide balanced feedback

Add labels to both approval and decline scenarios because models require a mix of good and bad examples to learn effectively. If you leave your model starved of legitimate transaction labels, it may skew overly conservative.
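A quick check on your own label export can show whether one class is missing or underrepresented. This is a minimal sketch: the `"positive"` and `"negative"` strings and the 5% minimum share are arbitrary assumptions for illustration, not SEON guidance.

```python
from collections import Counter
from typing import Dict, Iterable


def class_shares(polarities: Iterable[str]) -> Dict[str, float]:
    """Return the share of positive and negative labels in a sample."""
    counts = Counter(polarities)
    total = counts["positive"] + counts["negative"]
    if total == 0:
        raise ValueError("no positive or negative labels found")
    return {
        "positive": counts["positive"] / total,
        "negative": counts["negative"] / total,
    }


def has_both_classes(polarities: Iterable[str], min_share: float = 0.05) -> bool:
    """True if each class makes up at least `min_share` of the labels."""
    shares = class_shares(polarities)
    return all(share >= min_share for share in shares.values())
```

For instance, a sample containing only negative labels fails the check, which signals that legitimate outcomes are not being labeled.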

Scenarios in action

Approved by rules, flagged in review

A transaction passes your rule-based automation and is approved, but an alert trigger or an automated action you have set up flags it for review. It may initially carry the Marked as approved label; if the investigation shows otherwise, apply a more specific label such as Account takeover. Once the new label is applied, the model learns from this feedback and can apply the label to similar future transactions where applicable, regardless of which rules were triggered.

Declined by rules, verified legitimate

Rules fire on an unusual velocity pattern, but analysts confirm the transaction is genuine. Apply the Marked as approved label so that models reduce false positives over time.

Review state ambiguity

For “Review” states, apply the label that most precisely captures the final decision, ensuring the model knows whether to reinforce “approve” or “decline” in similar edge cases.